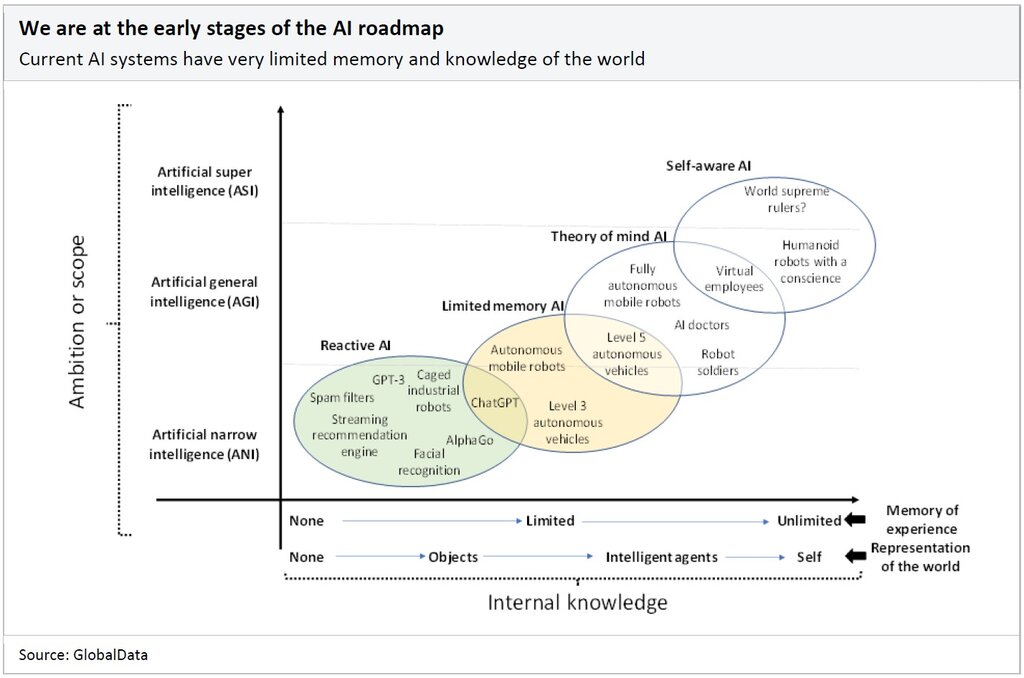

Despite the recent progress in the use of AI in real-world situations, such as facial recognition, virtual assistants, and (to a certain extent) autonomous vehicles (AVs), we are still in the early stages of the AI roadmap.

To understand the different types of AI, it is worth considering the information that systems hold and rely upon to make decisions. This, in turn, defines the range of capabilities and, ultimately, the AI scope. There is a significant difference between a system with no knowledge of the past and no understanding of the world and a system with a memory of past events that has an internal representation of the world and even of other intelligent agents and their goals.

As a result, AI can be classified into four types based on memory and knowledge.

The four types of AI

Reactive AI: This type of intelligence acts predictably based on the input it receives. It does not rely on any internal concept of the world. It can neither form memories nor use past experiences to inform decisions. Examples include email spam filters, video streaming recommendation engines, and IBM’s Deep Blue, the chess-playing supercomputer that beat world chess champion Garry Kasparov in 1997.

Limited memory AI: This type of intelligence can use past experiences incorporated into its internal representation of the world to inform future decisions. However, such past experiences are not stored for the long term. A good example is certain functionalities of AVs, which constantly observe other cars’ speed and direction, adding them to their internal preprogrammed representations of the world. These representations also include lane markings, traffic lights, and other essential elements, like curves in the road. With this combination of static information and recent memories, the autonomous vehicle can decide whether to accelerate or slow down, change lanes, or turn.

Theory of mind AI: This type of intelligence, which has not yet been successfully implemented, involves a more advanced internal representation of the world, including other intelligent agents or entities with their own goals that can affect their behavior. Following the prior example, to anticipate another driver's intention to change lanes, an AV must hold an internal representation of the world that includes the other driver's state of mind. As complex as this may sound, experienced human drivers do precisely that: they can anticipate, say, a pedestrian who may be about to cross the street suddenly or a distracted fellow driver who might abruptly change lanes without signaling.

In psychology, a system that imputes mental states to other agents by making inferences about them is properly viewed as a theory and thus called a theory of mind because such states are not directly observable, yet the system can be used to predict the behavior of others. Only an AI system with a theory of mind could handle mental states such as purpose or intention, knowledge, belief, thinking, doubt, guessing, pretending, or liking, to name a few.

Self-aware AI: This type of intelligence extends the theory of mind so that the system's representation of the world and other agents also includes itself. Self-aware, conscious systems know about their own internal states and can predict the feelings of others by inferring internal states similar to their own. If it sees a person crying, a self-aware AI system could infer that the person is sad because sadness is the internal state the system itself associates with crying.

Placing the various types of AI along a scope continuum is useful for understanding the overall AI landscape, as certain AI systems may fall between the theoretical categories defined above. For instance, it is unclear whether truly autonomous vehicles require a theory of mind to anticipate other drivers’ behavior. Similarly, a theory of mind may or may not be a requirement for fully autonomous mobile robots operating in a factory alongside humans, as these robots must ensure they do not hurt their human co-workers while handling heavy loads or machinery.

So far, we have only been able to develop AI systems that fall into the reactive or limited memory categories. Achieving theory of mind or self-awareness might be decades away.
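The difference between the two categories built so far can be illustrated with a minimal Python sketch. It is not taken from any real AV system: the function and class names, the 30-metre safe gap, and the three-reading window are all hypothetical choices made for illustration. The reactive controller decides from the current reading alone, while the limited memory controller also keeps a short, rolling window of recent readings and discards older ones.

```python
from collections import deque


def reactive_controller(gap_m: float, safe_gap_m: float = 30.0) -> str:
    """Reactive: the decision depends only on the current input."""
    return "brake" if gap_m < safe_gap_m else "cruise"


class LimitedMemoryController:
    """Limited memory: the decision also uses a few recent observations.

    All thresholds here are illustrative assumptions, not real AV parameters.
    """

    def __init__(self, safe_gap_m: float = 30.0, window: int = 3) -> None:
        self.safe_gap_m = safe_gap_m
        self.window = window
        # Older readings fall out automatically: nothing is stored long term.
        self.recent_gaps = deque(maxlen=window)

    def decide(self, gap_m: float) -> str:
        self.recent_gaps.append(gap_m)
        if gap_m < self.safe_gap_m:
            return "brake"
        gaps = list(self.recent_gaps)
        # The gap is "closing" if it shrank at every step of a full window.
        closing = len(gaps) == self.window and all(
            later < earlier for earlier, later in zip(gaps, gaps[1:])
        )
        return "slow" if closing else "cruise"


if __name__ == "__main__":
    readings = [60.0, 50.0, 40.0]  # distance to the car ahead, in metres
    controller = LimitedMemoryController()
    print([reactive_controller(g) for g in readings])
    # ['cruise', 'cruise', 'cruise']
    print([controller.decide(g) for g in readings])
    # ['cruise', 'cruise', 'slow']
```

With the same three readings, the reactive controller never responds because no single reading is below the safe gap, whereas the limited memory controller slows down once its recent observations show the gap steadily shrinking.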

Advanced AI capabilities

When building AI systems, a fundamental question is: what capabilities or behaviors make a system intelligent? The first step is to expand on our earlier definition and describe AI as any machine-based system that perceives its environment, pursues goals, adapts to feedback or change, provides information or takes action, and even has self-awareness and sentience.

Certainly, humans and animals in the higher layers of the evolutionary tree can interact with their environment, adapt to its changes, and take action to achieve their goals, such as individual and species survival. Whether animals have self-awareness or ethics is an open debate, but they have sentience.

This is relevant as even a weak AI approach can build systems that behave intelligently but are far from sentient.

There are five categories of advanced AI capabilities:

Human-AI interaction: This includes computer vision and conversational capabilities, as well as less developed artificial senses such as smell, taste, and touch. Artificial touch, also known as haptics, consists of creating an experience of touch by applying forces, vibrations, or motions to the user, and it is crucial in robotics. This category also covers forms of interaction between humans and AI that do not typically exist in nature, such as brain-machine interfaces.

Decision-making: This includes capabilities that the average person will typically associate with intelligence, such as identifying, recognizing, classifying, analyzing, synthesizing, forecasting, problem-solving, and planning. An example could be a system capable of recognizing symptoms and vital signs for a human patient that can also identify the patient’s medical condition and develop a treatment plan. Both symbolic AI and neural networks can be used to perform these tasks, and it is likely that in the long run, a combination of the two will be required—if not new paradigms altogether.

Motion: This includes the ability to move and interact with the world. Although underappreciated in the early years of AI research, it has become apparent that this is a complex capability that requires significant intelligence. For instance, an autonomous robot operating outdoors must be able to understand its position in three-dimensional space and move toward its destination. As it moves, it needs to analyze the feedback it receives from its sensors and adjust accordingly, as living things do. It has taken many years to build robots capable of walking on uneven terrain that can change as they step on it or grasping fragile objects like an egg or a human hand, something a five-year-old child can do without much effort. This is also intelligence, even if the average person might not appreciate it as such.

Creation: This includes creation capabilities across multiple areas, including audio, video, and text. It is also known as generative AI, and one of the most renowned examples today is OpenAI's ChatGPT, which can write original prose and chat with human fluency. The relationship between intelligence and creativity has been the subject of empirical research for decades. An intelligent system should be able to display creation capabilities in multiple areas, for instance, writing original text or music, drawing and painting, designing new structures or products, or even designing new AI systems. Allowing AI to make AI may sound like science fiction, yet researchers at OpenAI, Uber AI Labs, and various universities have worked on it for several years. They see it as a step on the road that may one day lead to AGI or even ASI.

Sentience: This includes the emergence of self-awareness or consciousness, which is the ultimate expression of intelligence in an artificial system. However, this is, at the moment, far from achievable. Many neuroscientists believe that consciousness is generated by the interoperation of various parts of the brain, known as the neural correlates of consciousness (NCC), but there is no consensus on this view. The NCC are defined as the minimal set of neuronal events and mechanisms sufficient for a specific conscious percept. Self-awareness, the sense of belonging to a society, an ethical view of right and wrong, and the greater good are just a few examples of matters that would be dealt with at this AI capability level. While such debates may appear purely philosophical at this stage, they will become more important as intelligent autonomous robots take on more roles in our society, some of which might involve moral decisions.

AI researchers can become entranced with the development of AI with sentience and even a conscience. However, there is a much better business case for robots with effective interaction and motion capabilities and modest decision-making skills.

GlobalData, the leading provider of industry intelligence, provided the underlying data, research, and analysis used to produce this article.
